Purpose of participation

Stock price prediction is pretty distant from the field of my interest, which is NLP.

But there are several purpose that I spent time participating in no-reward competition.

- Data wrangling practice: whatever datatype it be, I think it is important to wrangle as many data as possible. It is for the numpy & pandas practice

- Refreshing: I love studying the field of machine learning and deep learning. Although it is important to study deep enough, I think some refreshment helps the continuity of studying.

-

Somehow connected!

- The usage of cross validation

- Markov-chain vs matrix approach

- usage of sine, cosine function for timeseries annotation

- Regularization at ElasticNet might be also regarded as weight decay.

Using ElasticNetCV for stock price prediction

Adding more data

- external data: WTI, NASDAQ, was tested, but it was no good

- derivative data: after @SangHoeKim constructed derivative data, I added VWAP and SMI but didn't increase the model's performance

Choosing Adaquate Model

- When choosing a model, it was important that the model 1) don't fluctuate its performance over periods of time, 2) has higher performance than Linear Regression.

-

The original plan was to ensemble 1) time-series forecast model with 2) regression model. Regression model ElasticNetCV turned out to be the best model, but ARIMA model's performance was too overfit for specific periods only to use. For example, ARIMA was good for predicting overall bear market / overall bull market but was bad at predicting fluctuation between the spand of 5 days.

- ElasticNetCV was the right choice. It was advanced version of Linear Regression, and it has provided K-fold validation structure so that it comparably had more robustness compared to ElasticNet.

- ARIMA(0,1,1) showed pretty good performance. This was out of expectation, since (p, q, d) = (0, 1, 1) is just moving average model with constant uprise/downfall of differentiation.

- RandomForestRegressor and pmdARIMA was selected since its criteria can be customized as MAE instead of MSE.

- pmdARIMA is implementation of AutoARIMA from RStudio, but its performance was too low to use.

- XGBoostRegressor turned out to be not good. Not only this was my team's conclusion, but the general consensus over the Dacon community was similar. However @tjy3090 pointed out that XGB captured the turning point and the pattern of the graph, even though its NMAE score was low.

- Compared between ffill vs dropna(), but concluded that ffill outperformed on more recend dates.

| Model | NMAE (2021-09-06 ~ 2021-09-10) | NMAE (2021-09-15 ~ 2021-09-24) |

|---|---|---|

| ElasticNetCV | 3.02 | 2.93 |

| ARIMA(0,1,1) | 3.03 | - |

| ElasticNet | 3.12 | - |

| XGBoost | 3.87 | - |

| Linear Regression | 4.03 | - |

| RFRegressor | 4.11 | - |

| pmdARIMA | 8.81 | - |

- Experimented with ARIMA, pmdARIMA, ElasticNetCV, RandomForestRegressor and XGBoost.

- Competition's criteria is based on NMAE. Grading criteria function was constructed as following:

def NMAE(true_df, pred_df_input):

""" grading criteria for public leader board """

return (abs(true_df_copy - pred_df_input) / true_df_copy * 100).iloc[:5].values.mean()- Thanks for setting up grading criteria, the team was able to experiment different kinds of models without being limited by submission quota.

Code and Outputs

# import python modules

import os

import copy

# import data wrangling modules

import pandas as pd

import numpy as np

# import visualization modules

from tqdm import tqdm

import matplotlib.pyplot as plt

import seaborn as sns

# import dataloader & stock data downloader

import FinanceDataReader as fdr

# import machine learning modules

from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler

from sklearn.model_selection import train_test_split

# import machine learning models

from sklearn.linear_model import LinearRegression, ElasticNet, ElasticNetCV

from xgboost import XGBRegressor

# import cell output cleaner

from IPython.display import clear_output

# import stock data derivatives module

from talib import RSI, BBANDS, MACD, ATRimport warnings

warnings.filterwarnings('ignore') # remove warnings from pandas# set options pandas: display more columns and reduced digits of float numbers

pd.set_option('display.max_columns', 100)

pd.options.display.float_format = '{:.4f}'.formatpath = './open'

list_name = 'Stock_List.csv'

stock_list = pd.read_csv(os.path.join(path,list_name))

stock_list['종목코드'] = stock_list['종목코드'].apply(lambda x : str(x).zfill(6))

display(stock_list.head())

display(stock_list.tail())| 종목명 | 종목코드 | 상장시장 | |

|---|---|---|---|

| 0 | 삼성전자 | 005930 | KOSPI |

| 1 | SK하이닉스 | 000660 | KOSPI |

| 2 | NAVER | 035420 | KOSPI |

| 3 | 카카오 | 035720 | KOSPI |

| 4 | 삼성바이오로직스 | 207940 | KOSPI |

| 종목명 | 종목코드 | 상장시장 | |

|---|---|---|---|

| 371 | 더네이쳐홀딩스 | 298540 | KOSDAQ |

| 372 | 코엔텍 | 029960 | KOSDAQ |

| 373 | 원익홀딩스 | 030530 | KOSDAQ |

| 374 | 웹케시 | 053580 | KOSDAQ |

| 375 | 신흥에스이씨 | 243840 | KOSDAQ |

# define dates

""" Make dataset for training & evaluation """

from datetime import datetime

import os

# determines whether it is public leaderboard period or new_public period

BOOL_PUBLIC_PERIOD = False

# Determines whether to use public way or private way to utilize data

BOOL_PUBLIC = False

# set data window range

start_date = None # if this was set to 2021-01-04, then the mae shoots up to from 3.0 to 4.7

today = datetime.now()

data_end_date = today.strftime('%Y-%m-%d')

# public_start_date = '2021-09-10'

public_start_4th = '2021-09-06'

public_end_date = '2021-09-10'

check_point = '2021-09-14'

recent_known_date = '2021-09-24'

private_start_4th = '2021-09-27'

private_end_4th = '2021-10-01'if BOOL_PUBLIC_PERIOD:

sample_name = 'sample_submission.csv' # week 4 submission

pred_df = pd.read_csv(os.path.join(path,sample_name)).set_index('Day')

else:

new_public_name = "new_public.csv"

pred_df = pd.read_csv(os.path.join(path,new_public_name)).set_index('Day')

pred_df| 000060 | 000080 | 000100 | 000120 | 000150 | 000240 | 000250 | 000270 | 000660 | 000670 | 000720 | 000810 | 000880 | 000990 | 001230 | 001440 | 001450 | 001740 | 002380 | 002790 | 003000 | 003090 | 003380 | 003410 | 003490 | 003670 | 003800 | 004000 | 004020 | 004170 | 004370 | 004490 | 004800 | 004990 | 005250 | 005290 | 005300 | 005380 | 005385 | 005387 | 005490 | 005830 | 005850 | 005930 | 005935 | 005940 | 006260 | 006280 | 006360 | 006400 | ... | 253450 | 256840 | 263720 | 263750 | 267250 | 267980 | 268600 | 271560 | 272210 | 272290 | 273130 | 278280 | 278530 | 282330 | 285130 | 287410 | 290510 | 290650 | 292150 | 293490 | 293780 | 294090 | 294870 | 298000 | 298020 | 298050 | 298380 | 298540 | 299030 | 299660 | 299900 | 307950 | 314130 | 316140 | 319400 | 319660 | 321550 | 323990 | 326030 | 330590 | 330860 | 336260 | 336370 | 347860 | 348150 | 348210 | 352820 | 357780 | 363280 | 950130 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Day | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 2021-09-15 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-16 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-17 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-23 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-24 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-27 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-28 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-29 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-09-30 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2021-10-01 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

10 rows × 376 columns

""" fill out ground truth stock price of the companies for the public submission period """

if BOOL_PUBLIC_PERIOD:

blank_submission = pd.read_csv(os.path.join(path,sample_name)).set_index('Day')

true_df = blank_submission.copy()

true_df_first_day = public_start_4th

true_df_last_day = public_end_date

else:

df_new_public = pd.read_csv(os.path.join(path,new_public_name)).set_index('Day')

true_df = df_new_public.copy()

true_df_first_day = true_df.iloc[0].name

true_df_last_day = true_df.iloc[-1].name

print(true_df_first_day, true_df_last_day)2021-09-15 2021-10-01for company_code in tqdm(true_df.columns):

data_raw = fdr.DataReader(company_code, start = start_date, end = private_start_4th).reset_index()

data_raw = data_raw.drop(columns = ['Change'])

data_raw = data_raw.replace(0, np.nan).ffill()

data_raw.index = data_raw.Date

# print(data_raw.head())

public_true_closes = data_raw.loc[true_df_first_day:true_df_last_day].Close.iloc[:]

# print(public_true_closes)

true_df.loc[:,company_code] = public_true_closes.to_list() * 2

true_df.head()100%|██████████| 376/376 [00:50<00:00, 7.50it/s]| 000060 | 000080 | 000100 | 000120 | 000150 | 000240 | 000250 | 000270 | 000660 | 000670 | 000720 | 000810 | 000880 | 000990 | 001230 | 001440 | 001450 | 001740 | 002380 | 002790 | 003000 | 003090 | 003380 | 003410 | 003490 | 003670 | 003800 | 004000 | 004020 | 004170 | 004370 | 004490 | 004800 | 004990 | 005250 | 005290 | 005300 | 005380 | 005385 | 005387 | 005490 | 005830 | 005850 | 005930 | 005935 | 005940 | 006260 | 006280 | 006360 | 006400 | ... | 253450 | 256840 | 263720 | 263750 | 267250 | 267980 | 268600 | 271560 | 272210 | 272290 | 273130 | 278280 | 278530 | 282330 | 285130 | 287410 | 290510 | 290650 | 292150 | 293490 | 293780 | 294090 | 294870 | 298000 | 298020 | 298050 | 298380 | 298540 | 299030 | 299660 | 299900 | 307950 | 314130 | 316140 | 319400 | 319660 | 321550 | 323990 | 326030 | 330590 | 330860 | 336260 | 336370 | 347860 | 348150 | 348210 | 352820 | 357780 | 363280 | 950130 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Day | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 2021-09-15 | 30400 | 34700 | 65400 | 170500 | 96600 | 16800 | 51800 | 83300 | 107500 | 728000 | 54600 | 232000 | 35400 | 58500 | 20050 | 2770 | 25300 | 5470 | 455500 | 53200 | 21550 | 36200 | 9630 | 8480 | 32550 | 161500 | 50900 | 90500 | 52000 | 275000 | 294000 | 90600 | 122500 | 35400 | 32800 | 28350 | 151500 | 209500 | 102500 | 99700 | 361500 | 61800 | 28500 | 77000 | 71800 | 13400 | 68000 | 363000 | 45000 | 750000 | ... | 83100 | 43100 | 40500 | 84300 | 68600 | 73900 | 64100 | 129500 | 18800 | 39000 | 109300 | 279500 | 13545 | 179500 | 298500 | 7900 | 7840 | 38350 | 13910 | 72400 | 51000 | 52900 | 28450 | 401500 | 748000 | 753000 | 19600 | 32950 | 62400 | 108000 | 16300 | 107500 | 43800 | 11000 | 3505 | 35750 | 20000 | 78300 | 113000 | 5570 | 47600 | 51000 | 65700 | 33550 | 29850 | 59600 | 279500 | 302700 | 28250 | 17450 |

| 2021-09-16 | 30600 | 34700 | 64800 | 168000 | 102000 | 16800 | 50900 | 84500 | 104000 | 718000 | 55700 | 227000 | 35300 | 56700 | 20500 | 2705 | 24900 | 5450 | 458000 | 52600 | 21200 | 34900 | 9540 | 8460 | 32100 | 163000 | 51000 | 91300 | 51600 | 271500 | 293000 | 90300 | 124500 | 35000 | 32650 | 29450 | 151500 | 208000 | 102500 | 99800 | 364500 | 61400 | 27800 | 76100 | 71500 | 13250 | 68500 | 365000 | 46100 | 725000 | ... | 82300 | 43600 | 40500 | 82000 | 69800 | 73300 | 60500 | 125000 | 18850 | 38900 | 109365 | 276300 | 13425 | 174500 | 297500 | 7760 | 7680 | 39700 | 13745 | 71300 | 49750 | 53000 | 28850 | 419500 | 756000 | 777000 | 19400 | 32600 | 61900 | 113500 | 16150 | 109000 | 46800 | 11000 | 3515 | 35000 | 19850 | 80300 | 114000 | 5530 | 47400 | 51100 | 66000 | 33200 | 30150 | 58400 | 276500 | 294700 | 28150 | 16850 |

| 2021-09-17 | 30350 | 34050 | 64500 | 169000 | 100000 | 16550 | 51700 | 84100 | 107000 | 718000 | 56200 | 227500 | 35050 | 57600 | 19950 | 2665 | 24750 | 5350 | 454000 | 53100 | 21100 | 34950 | 9570 | 8350 | 32950 | 161000 | 50900 | 91100 | 51500 | 271000 | 290000 | 89500 | 123000 | 34750 | 32950 | 30950 | 149000 | 209000 | 99600 | 97500 | 362500 | 60800 | 27600 | 77200 | 71800 | 13400 | 67400 | 358500 | 46150 | 725000 | ... | 82500 | 49750 | 39950 | 82400 | 65300 | 72400 | 61500 | 126000 | 19200 | 39450 | 109225 | 269400 | 13435 | 175000 | 288000 | 7700 | 7700 | 39550 | 13835 | 72200 | 50600 | 53900 | 28600 | 423000 | 733000 | 810000 | 19500 | 32450 | 61800 | 118000 | 16300 | 108000 | 46950 | 11050 | 3505 | 38300 | 19900 | 80900 | 113500 | 5560 | 47900 | 50500 | 65100 | 33050 | 30150 | 59500 | 276500 | 296900 | 28050 | 17300 |

| 2021-09-23 | 30100 | 33700 | 63700 | 167500 | 100500 | 16200 | 51600 | 84200 | 105500 | 699000 | 54600 | 227500 | 34950 | 56900 | 19000 | 2580 | 24850 | 5300 | 443500 | 51700 | 20800 | 34950 | 9560 | 8330 | 34200 | 158000 | 51100 | 94100 | 48750 | 269500 | 289000 | 87200 | 122000 | 34550 | 32750 | 30950 | 149000 | 208500 | 100000 | 98300 | 346500 | 63000 | 27150 | 77400 | 71800 | 13250 | 66300 | 354500 | 44950 | 731000 | ... | 84100 | 52800 | 38700 | 82200 | 64400 | 73100 | 59000 | 124000 | 19000 | 41150 | 109125 | 268000 | 13410 | 171500 | 289500 | 7270 | 7590 | 38500 | 13850 | 68900 | 45900 | 52600 | 28050 | 400500 | 712000 | 799000 | 20200 | 31450 | 60500 | 118000 | 16800 | 107000 | 44850 | 11100 | 3395 | 36800 | 19100 | 79700 | 109500 | 5550 | 46750 | 50100 | 65400 | 32000 | 29250 | 56800 | 270500 | 290100 | 28150 | 20450 |

| 2021-09-24 | 29600 | 33400 | 62900 | 166500 | 97000 | 16300 | 51100 | 82600 | 104000 | 696000 | 53200 | 228500 | 34650 | 56700 | 18700 | 2525 | 25000 | 5300 | 455000 | 50800 | 20450 | 34300 | 9540 | 8250 | 34250 | 162000 | 49350 | 93000 | 48650 | 272000 | 289500 | 85400 | 122500 | 34600 | 31950 | 31000 | 149000 | 207000 | 99500 | 98200 | 345000 | 62700 | 27700 | 77300 | 71700 | 13150 | 66100 | 344500 | 44400 | 726000 | ... | 85100 | 54500 | 40950 | 87400 | 64100 | 72900 | 59000 | 122000 | 18500 | 41300 | 108840 | 272900 | 13435 | 172500 | 283000 | 7780 | 7530 | 38950 | 13825 | 68300 | 45400 | 51500 | 28000 | 400500 | 713000 | 860000 | 19800 | 31500 | 60700 | 110900 | 16650 | 105500 | 45050 | 11150 | 3430 | 36900 | 18800 | 76700 | 107000 | 5530 | 46700 | 49050 | 67600 | 31700 | 29050 | 56900 | 268000 | 291000 | 28600 | 20050 |

5 rows × 376 columns

def makeData(data):

"""

- make derivative data from the fdr dataset

- not using external dataset which increases noise that harms the performance

"""

data = data.copy()

# Korean won volume

data['KRW_Vol'] = data[['Close', 'Volume']].prod(axis=1)

data['KRW_Vol_1m'] = data.KRW_Vol.rolling(21).mean()

# RSI

data['RSI'] = RSI(data.Close)

# Bollinger Bands

def compute_bb(close):

high, mid, low = BBANDS(close, timeperiod=20)

return pd.DataFrame({'bb_high': high, 'bb_low': low}, index=close.index)

data[['bb_high', 'bb_low']] = compute_bb(data.Close)

data['bb_high'] = data.bb_high.sub(data.Close).div(data.bb_high).apply(np.log1p)

data['bb_low'] = data.Close.sub(data.bb_low).div(data.Close).apply(np.log1p)

# ATR

def compute_atr(stock_data):

df = ATR(stock_data.High, stock_data.Low,

stock_data.Close, timeperiod=14)

return df.sub(df.mean()).div(df.std())

data['ATR'] = compute_atr(data)

#MACD

def compute_macd(close):

macd = MACD(close)[0]

return (macd - np.mean(macd))/np.std(macd)

data['MACD'] = compute_macd(data.Close)

# Lagged Returns

lags = [1, 2, 3, 4, 5, 10, 21, 42, 63]

for lag in lags:

data[f'return_{lag}d'] = data.Close.pct_change(lag).add(1).pow(1 / lag).sub(1)

for t in [1, 2, 3, 4, 5]:

for lag in [1, 5, 10, 21]:

data[f'return_{lag}d_lag{t}'] = data[f'return_{lag}d'].shift(t * lag)

# target return

for t in [1, 2, 3, 4, 5]:

data[f'target_{t}d'] = data[f'return_{t}d'].shift(-t)

# volume change

q = 0.01

data[data.filter(like='Vol').columns] = data.filter(like='Vol').pct_change().apply(lambda x: x.clip(lower=x.quantile(q),upper=x.quantile(1 - q)))

data = data.drop(['Date', 'Open', 'High', 'Low', 'Close'], axis= 1)

data = data.fillna(method="ffill")

data = data.fillna(method="bfill")

# display(data.tail())

return datadef mape(true_df, pred_df_input):

""" grading criteria for public leader board """

# extract columns from true_df same as pred_df_input

true_df_copy = true_df.copy()

true_df_copy = true_df_copy.loc[:, pred_df_input.columns]

true_df_copy = true_df_copy.iloc[:5]

pred_df_input = pred_df_input.iloc[:5]

return (abs(true_df_copy - pred_df_input) / true_df_copy * 100).iloc[:5].values.mean()def sklearn_predict(

pred_df_input,

model,

bool_public,

start_date,

recent_known_date,

clip=True,

scaler=RobustScaler()

):

""" make prediction dataframe """

if bool_public:

# get first five rows of pred_df_input

pred_df_input = pred_df_input.iloc[:5]

else:

# dropping first 5 days of public dates from the submission dataframe

pred_df_input = pred_df_input.iloc[5:]

for company_code in tqdm(pred_df_input.columns):

# clear_output()

data_raw = fdr.DataReader(

company_code,

start = start_date,

end = recent_known_date

).reset_index()

data_raw = data_raw.drop(columns = 'Change')

data_raw = data_raw.replace(0, np.nan).ffill()

data_raw.index = data_raw.Date

# clear_output() # clear output for the jupyter notebook

if bool_public: # if public submission

# make necessary data for the prediction

data = makeData(data_raw)

# get the last date of the public submission period

public_last_close = data_raw.loc[recent_known_date].Close

public = data.loc[[recent_known_date]]

# loc[] is inclusive for the end slicing date, unlike list slicing

train_indv_comp = data.loc[:recent_known_date]

Ys = train_indv_comp.filter(like='target')

X = train_indv_comp.drop(Ys.columns, axis=1)

Ys_public = public.filter(like='target')

X_public = public.drop(Ys_public.columns, axis=1)

X = scaler.fit_transform(X)

X_public = scaler.transform(X_public)

pred_public = []

public_close = public_last_close

for y_col in Ys.columns:

model.fit(X, Ys[y_col])

r_pred_public = model.predict(X_public)

public_close = public_close * (1+r_pred_public[0])

pred_public.append(public_close)

# print(pred_df_input.shape)

# display(pred_df_input)

pred_df_input.loc[:,company_code] = pred_public

else: # if private submission

data = makeData(data_raw)

# display(pred_df_input)

private_last_close = data_raw.loc[recent_known_date].Close

# display(data)

private = data.loc[[recent_known_date]]

# display(private)

train_indv_comp = data.loc[:recent_known_date]

# display(train_indv_comp)

# make train_indv_comp data

Ys = train_indv_comp.filter(like='target') #Consisted of mon, tue, wed, thur, fri data columns

X = train_indv_comp.drop(Ys.columns, axis=1)

# make private data

Ys_private = private.filter(like='target')

X_private = private.drop(Ys_private.columns, axis=1)

# fit scaler

X = scaler.fit_transform(X)

X_private = scaler.transform(X_private)

pred_private = []

private_close = private_last_close

for y_col in Ys.columns:

model.fit(X, Ys[y_col])

r_pred_private = model.predict(X_private)

private_close = private_close * (1+r_pred_private[0])

pred_private.append(private_close)

# print(pred_private)

pred_df_input.loc[:,company_code] = pred_private

# display(pred_df_input)

return pred_df_inputCheck the performance for sampled 20 companies

""" sample prediction """

# Utilizing ElasticnetCV to automatically tune the alpha hyperparameter with cross validation.

# (rho is left at default value 0.5)

# ElasticnetCV Documentation: https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.ElasticNetCV.html#sklearn.linear_model.ElasticNetCV

# Cross Validation documentation: https://scikit-learn.org/stable/modules/cross_validation.html#cross-validation

# Builtin Cross Validation provided models: https://scikit-learn.org/stable/glossary.html#term-cross-validation-estimator

"""

https://stackoverflow.com/questions/12283184/how-is-elastic-net-used

To automatically tune the value of alpha it is indeed possible to use ElasticNetCV which will spare redundant computation as apposed to using GridSearchCV in the ElasticNet class for tuning alpha.

In complement, you can use a regular GridSearchCV for finding the optimal value of rho.

"""

# name = "ElasticNet_ALL_External_Dataset"

name = "ElaticNetCV_private"

# random sample 20 companies

PRED_NUM = 20

if PRED_NUM != None:

pred_df_sampled = pred_df.copy()

pred_df_sampled = pred_df_sampled.sample(PRED_NUM, axis=1, random_state=616)

scaler = StandardScaler()

model = ElasticNetCV(max_iter=1000000) # default K-Fold is 5 folds

df_result_sampled = sklearn_predict(

pred_df_input = pred_df_sampled,

model = model,

bool_public= True,

start_date=start_date,

recent_known_date = check_point,

clip=True,

scaler=scaler

)df_result_sampled_copy = df_result_sampled.copy()

df_result_sampled_copy| 185750 | 024110 | 206650 | 038500 | 067630 | 241560 | 298000 | 035900 | 005387 | 000990 | 293490 | 078130 | 035420 | 019170 | 217270 | 319660 | 161390 | 035600 | 014680 | 041510 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Day | ||||||||||||||||||||

| 2021-09-15 | 125064.7540 | 10254.9362 | 42618.1827 | 5372.9996 | 19360.0847 | 42520.2396 | 398721.4753 | 40413.6252 | 99356.3084 | 58953.4027 | 72497.5907 | 5427.5472 | 403990.9318 | 67500.6979 | 18626.1257 | 35912.5445 | 45053.3026 | 18723.2635 | 327323.1354 | 64794.6523 |

| 2021-09-16 | 125139.3222 | 10258.2773 | 42721.3990 | 5372.6779 | 19387.2423 | 42534.2163 | 399329.2842 | 40504.0768 | 99247.8148 | 58987.6293 | 72439.7577 | 5433.1925 | 405246.7272 | 67571.6898 | 18639.1341 | 35965.5198 | 45040.3133 | 18740.6921 | 327575.8054 | 64930.5748 |

| 2021-09-17 | 125205.0949 | 10261.0199 | 42818.9030 | 5371.4292 | 19410.6680 | 42545.0733 | 399883.2743 | 40574.4049 | 99185.6754 | 59015.3259 | 72452.6539 | 5438.2213 | 406134.5774 | 67632.3829 | 18649.8843 | 36014.5010 | 45030.5329 | 18759.7012 | 327804.9015 | 64994.3200 |

| 2021-09-23 | 125155.5177 | 10263.4246 | 42915.5822 | 5369.6404 | 19432.5767 | 42553.3841 | 400448.6410 | 40646.8703 | 99134.4965 | 59039.3481 | 72379.0831 | 5442.9652 | 406902.2789 | 67687.9173 | 18659.4037 | 36058.6550 | 45023.5971 | 18773.1005 | 328019.5180 | 65052.2335 |

| 2021-09-24 | 125093.3296 | 10265.6863 | 43001.4697 | 5367.4838 | 19453.5579 | 42561.5443 | 401019.1642 | 40716.1010 | 99099.2499 | 59061.2745 | 72294.9027 | 5447.5175 | 407592.0695 | 67740.2365 | 18667.9796 | 36102.0524 | 45011.9833 | 18785.5887 | 328226.2354 | 65100.9893 |

mape_value = mape(true_df, df_result_sampled_copy)

print(mape_value)2.9318977420110794전체

""" training time: 3444 second(or 57 minutes) """

# name = "ElasticNet_ALL_External_Dataset"

name = "ElasticNetCV_private"

# predict for all 376 companies

PRED_NUM = None

scaler = StandardScaler()

model = ElasticNetCV(max_iter=1000000) # default K-Fold is 5 folds

df_result_all = sklearn_predict(

pred_df,

model,

bool_public= BOOL_PUBLIC,

start_date=start_date,

recent_known_date = recent_known_date,

clip=True,

scaler=scaler

)100%|██████████| 376/376 [1:02:57<00:00, 10.05s/it]display(df_result_all)| 000060 | 000080 | 000100 | 000120 | 000150 | 000240 | 000250 | 000270 | 000660 | 000670 | 000720 | 000810 | 000880 | 000990 | 001230 | 001440 | 001450 | 001740 | 002380 | 002790 | 003000 | 003090 | 003380 | 003410 | 003490 | 003670 | 003800 | 004000 | 004020 | 004170 | 004370 | 004490 | 004800 | 004990 | 005250 | 005290 | 005300 | 005380 | 005385 | 005387 | 005490 | 005830 | 005850 | 005930 | 005935 | 005940 | 006260 | 006280 | 006360 | 006400 | ... | 253450 | 256840 | 263720 | 263750 | 267250 | 267980 | 268600 | 271560 | 272210 | 272290 | 273130 | 278280 | 278530 | 282330 | 285130 | 287410 | 290510 | 290650 | 292150 | 293490 | 293780 | 294090 | 294870 | 298000 | 298020 | 298050 | 298380 | 298540 | 299030 | 299660 | 299900 | 307950 | 314130 | 316140 | 319400 | 319660 | 321550 | 323990 | 326030 | 330590 | 330860 | 336260 | 336370 | 347860 | 348150 | 348210 | 352820 | 357780 | 363280 | 950130 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Day | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 2021-09-27 | 29619.9213 | 33404.8682 | 62934.1627 | 166627.0991 | 96951.3550 | 16318.2245 | 51157.0890 | 82640.1175 | 104056.0722 | 696633.1831 | 53210.2029 | 228543.4327 | 34616.2408 | 56750.9950 | 18713.0184 | 2525.4144 | 25020.4805 | 5300.8629 | 455412.5823 | 50759.8474 | 20476.7460 | 34335.2240 | 9536.7369 | 8255.9627 | 34271.9425 | 162211.5139 | 49392.2069 | 92991.9337 | 48689.8328 | 272236.9822 | 289666.8211 | 85478.4144 | 122615.8457 | 34643.0840 | 31984.6833 | 31032.8182 | 149020.7516 | 206984.5334 | 99366.3220 | 98193.8653 | 345410.9456 | 62765.9660 | 27726.3380 | 77250.9716 | 71617.0677 | 13158.5612 | 66146.4555 | 344804.7013 | 44426.1867 | 726008.7348 | ... | 85134.8412 | 55242.7675 | 41037.1551 | 87580.0575 | 64106.1314 | 72915.4644 | 59209.0412 | 122193.8499 | 18533.3206 | 41371.7568 | 108823.5392 | 273798.8944 | 13438.8918 | 172536.5767 | 282948.8369 | 7795.4170 | 7536.9262 | 39055.3084 | 13833.1392 | 68344.4815 | 45389.0326 | 51583.5746 | 27979.6905 | 400906.3756 | 710964.3401 | 871526.8897 | 19831.0911 | 31559.7435 | 60848.2452 | 111160.9148 | 16692.1713 | 105428.1348 | 45187.3560 | 11146.7696 | 3438.2884 | 36965.8713 | 18833.2831 | 76519.7910 | 106993.1205 | 5528.7665 | 46765.8625 | 49179.3201 | 67486.5771 | 31774.1941 | 29074.3546 | 56910.9233 | 268362.2621 | 290074.0721 | 28644.1991 | 20074.8843 |

| 2021-09-28 | 29641.2372 | 33410.9766 | 62996.9338 | 166704.4970 | 97004.0898 | 16335.1979 | 51198.9499 | 82659.9783 | 104066.5543 | 697048.4354 | 53154.2625 | 228638.8170 | 34595.6852 | 56783.5609 | 18720.7457 | 2524.9961 | 25035.1632 | 5299.6526 | 455698.5859 | 50696.7335 | 20497.6473 | 34358.2641 | 9531.8428 | 8259.1139 | 34295.1064 | 162433.0638 | 49423.3173 | 92950.0978 | 48739.4518 | 272558.1387 | 289818.6419 | 85665.7054 | 122699.8400 | 34683.6341 | 32012.5879 | 31013.3876 | 149093.8104 | 207123.2654 | 99285.9642 | 98222.7768 | 345734.1227 | 62823.5848 | 27745.6550 | 77270.0037 | 71642.3679 | 13163.4640 | 66179.3712 | 344998.0796 | 44450.9740 | 726398.5421 | ... | 85150.5989 | 55572.7020 | 41155.7774 | 87730.7834 | 64107.7192 | 72911.9722 | 59367.1055 | 122342.1253 | 18561.5405 | 41391.4693 | 108832.3748 | 274609.9104 | 13443.2515 | 172502.3226 | 282953.2805 | 7811.3651 | 7541.5022 | 39147.3212 | 13840.5457 | 68263.6724 | 45478.3515 | 51629.3426 | 27953.9254 | 400845.8235 | 710307.6560 | 880941.8171 | 19848.3055 | 31603.2093 | 60986.5374 | 111366.8870 | 16730.7055 | 105360.5544 | 45304.5754 | 11141.9899 | 3440.4603 | 37020.5905 | 18850.3581 | 76408.3022 | 106751.9680 | 5527.3493 | 46823.7669 | 49393.8403 | 67488.7739 | 31799.0865 | 29069.9619 | 56848.9364 | 269112.6391 | 289045.8422 | 28671.6626 | 20092.6880 |

| 2021-09-29 | 29659.9998 | 33414.0403 | 63060.8037 | 166766.6097 | 97047.5968 | 16350.5709 | 51235.1900 | 82672.4479 | 104029.4717 | 697398.8750 | 53127.4203 | 228808.0665 | 34591.6177 | 56809.9294 | 18726.6707 | 2524.2865 | 25047.6200 | 5297.8121 | 455953.6829 | 50647.3469 | 20511.6095 | 34379.0331 | 9526.7009 | 8261.3723 | 34311.4601 | 162599.8111 | 49450.8603 | 92915.7797 | 48838.7770 | 272890.9565 | 289973.1638 | 85718.1060 | 122772.9330 | 34729.9229 | 32037.7311 | 31013.6800 | 149205.9845 | 207299.9896 | 99307.0351 | 98257.5142 | 346058.4688 | 62874.2882 | 27762.7063 | 77304.3423 | 71660.5777 | 13166.8532 | 66207.6025 | 345139.2794 | 44471.1243 | 726786.1160 | ... | 85155.3182 | 55919.4128 | 41232.5346 | 87873.5729 | 64103.3814 | 72905.3485 | 59515.5655 | 122422.1061 | 18588.3547 | 41409.0996 | 108841.1698 | 275375.6071 | 13447.6955 | 172457.9531 | 282988.4423 | 7825.7740 | 7545.5702 | 39236.0164 | 13847.9131 | 68146.6331 | 45566.7920 | 51948.7495 | 27927.4594 | 401388.8262 | 710597.3525 | 891051.5782 | 19860.8061 | 31642.8484 | 61120.5119 | 111560.2977 | 16767.3728 | 105286.1974 | 45412.5893 | 11136.9467 | 3442.4249 | 37077.8781 | 18866.4524 | 75868.4705 | 106511.4224 | 5526.0227 | 46879.8267 | 49616.5520 | 67502.8980 | 31817.8737 | 29062.0097 | 56912.9122 | 269886.0036 | 289047.2415 | 28689.7233 | 20106.9512 |

| 2021-09-30 | 29677.6329 | 33418.5014 | 63124.2603 | 166819.7420 | 97087.3846 | 16363.0247 | 51268.0538 | 82681.2358 | 104028.8458 | 697716.3061 | 53120.1486 | 228978.3769 | 34592.4368 | 56832.7125 | 18735.2131 | 2523.4326 | 25058.8927 | 5295.6598 | 456193.9527 | 50600.5772 | 20524.5186 | 34398.6680 | 9521.3283 | 8263.1191 | 34326.0686 | 162741.3691 | 49476.8197 | 92945.8520 | 48980.0360 | 273205.5961 | 290114.5844 | 85767.4181 | 122840.7642 | 34760.9855 | 32059.8130 | 31001.9968 | 149288.2221 | 207442.3514 | 99319.4612 | 98298.1543 | 346370.0238 | 62921.4168 | 27792.9857 | 77346.4759 | 71697.8110 | 13161.0052 | 66233.6612 | 345259.8600 | 44489.0416 | 727210.4963 | ... | 85183.3230 | 56093.8298 | 41308.5059 | 88007.7671 | 64100.4969 | 72897.1765 | 59654.9000 | 122468.4388 | 18614.0005 | 41424.6338 | 108850.1303 | 276138.1048 | 13451.9330 | 172398.1197 | 283351.6078 | 7842.5450 | 7548.6130 | 39325.1257 | 13855.0212 | 68041.5214 | 45651.3523 | 52262.2849 | 27902.0970 | 401944.6413 | 711507.5729 | 899799.3356 | 19871.2709 | 31679.9238 | 61253.1297 | 111754.5069 | 16804.7915 | 105233.4033 | 45517.8564 | 11131.7003 | 3444.3205 | 37129.6121 | 18875.7521 | 75596.5935 | 106310.3201 | 5524.5951 | 46926.6664 | 49832.2755 | 67486.1650 | 31820.3359 | 29041.0651 | 57020.0201 | 271019.1920 | 287825.4264 | 28709.3131 | 20119.0428 |

| 2021-10-01 | 29694.6207 | 33423.9375 | 63193.3635 | 166868.1106 | 97124.4400 | 16345.2020 | 51298.7895 | 82687.5453 | 104023.7073 | 698015.0172 | 53134.0349 | 229134.0139 | 34598.0681 | 56853.3770 | 18742.5141 | 2522.5170 | 25069.4874 | 5293.3238 | 456423.1230 | 50563.0390 | 20536.8711 | 34415.6411 | 9515.8036 | 8264.5175 | 34326.4535 | 162881.1631 | 49475.1053 | 92973.7446 | 49028.6449 | 273507.2655 | 290222.1305 | 85814.4315 | 122867.5208 | 34787.7937 | 32081.1379 | 30982.2917 | 149367.6134 | 207596.9442 | 99316.1011 | 98349.8378 | 346657.8080 | 62966.5126 | 27821.7097 | 77389.4860 | 71756.7589 | 13156.2628 | 66258.1970 | 345369.7252 | 44505.3942 | 727541.8449 | ... | 85210.5932 | 56443.1028 | 41380.6160 | 88139.9194 | 64095.7902 | 72888.7033 | 59793.2597 | 122519.5538 | 18640.2923 | 41437.6867 | 108859.2157 | 276890.2609 | 13456.2170 | 172334.4949 | 283873.0039 | 7889.8827 | 7550.9179 | 39412.9016 | 13862.0739 | 67979.0034 | 45735.5675 | 52611.6164 | 27877.6216 | 402492.1156 | 712434.5186 | 908492.8089 | 19880.8972 | 31718.5363 | 61385.7797 | 111941.7628 | 16845.4909 | 105197.0065 | 45590.5963 | 11126.2165 | 3446.0998 | 37179.4420 | 18881.2332 | 75544.5761 | 106053.9055 | 5522.9875 | 46959.3655 | 50044.8621 | 67442.7575 | 31815.1584 | 29013.0873 | 57201.4429 | 272120.7188 | 287296.5950 | 28730.4632 | 20130.6205 |

5 rows × 376 columns

name = "ElasticNetCV_private_last"

df_result_all.to_csv(f'predict/{name}.csv')# load result from csv

df_result_all = pd.read_csv(f'predict/{name}.csv')# apply retina display for clearer visualization

%config InlineBackend.figure_format = 'retina'

# Korean font path designation

import matplotlib.font_manager as fm

fontpath = './font/NanumBarunGothic.ttf'

font = fm.FontProperties(fname=fontpath, size=14)

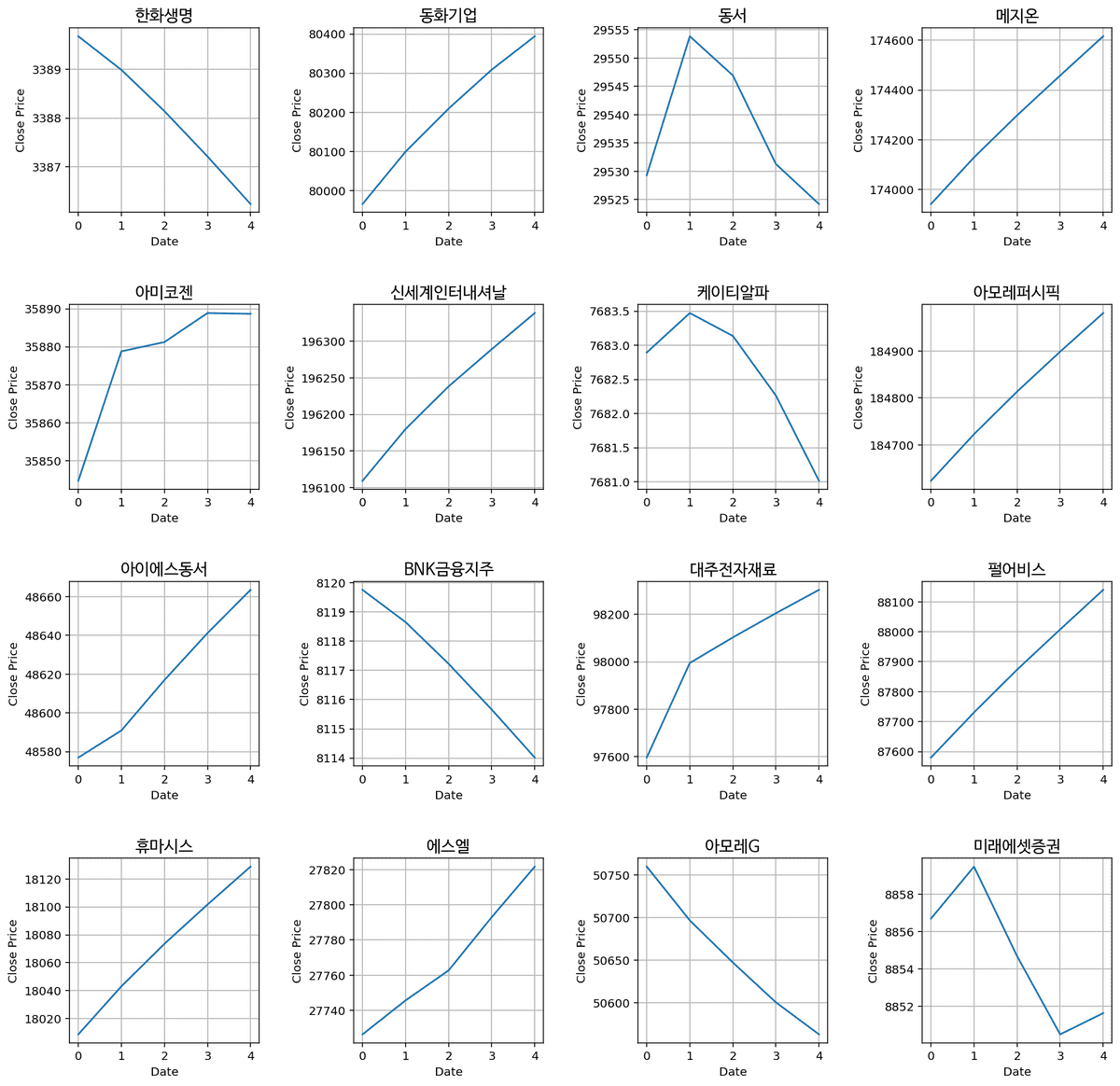

# sample 9 companies from stock_list

SAMPLE_NUM = 16

sampled_data = stock_list.sample(SAMPLE_NUM)

# visualize 16 companies subplots from df_result_all

fig, ax = plt.subplots(nrows=4, ncols=4, figsize=(16,16))

# make margin between figures

plt.subplots_adjust(hspace=0.5, wspace=0.5)

# visualize df_result_all

for i, code in enumerate(sampled_data["종목코드"]):

ax[i//4, i%4].plot(df_result_all.loc[:,code])

# find the matching row of sampled_data from code

company_name = stock_list[stock_list["종목코드"] == code]["종목명"].values[0]

ax[i//4, i%4].set_title(company_name, fontproperties=font)

ax[i//4, i%4].set_xlabel('Date')

ax[i//4, i%4].set_ylabel('Close Price')

ax[i//4, i%4].grid()

# check submission's validity

submission_last = pd.read_csv("./predict/ElasticNetCV_2021-09-26.csv")

# show information of the submission

submission_last.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 10 entries, 0 to 9

Columns: 377 entries, Day to 950130

dtypes: float64(376), object(1)

memory usage: 29.6+ KB